This year, Omise is pushing to become an “AI-first” company, embedding intelligence into both our operations and the products we build. This shift raises important questions: How do you move faster without introducing new risks? How do you adopt powerful tools while staying compliant and in control?

These questions take on particular weight in security.

Earlier this month, we spoke with Hardy Mansen, Security Manager at Omise, about how his team is using AI in practice, and how they are balancing productivity, risk, and responsibility while working alongside a new kind of partner.

For Hardy, the connection between security work and AI felt natural from the start. “We’ve found that generative AI and the work of a security team go very well together,” he says.

“We’re constantly dealing with questions that don’t have an easy yes or no answer,” Hardy explains. “We make risk-based decisions with incomplete data.” In that context, AI’s probabilistic nature feels very familiar.

The team does not treat AI output as truth, but as one input among many. Paired with human judgment, it helps surface insights faster while keeping the quality intact.

Because the security team covers the full business security lifecycle, AI shows up in many parts of their daily work. “We provide internal tooling for the organization, and many of our technical and non-technical activities happen in text editors. From there, we’ve enabled AI agents and workflows such as coding assistance, AI chat and different MCP servers.”

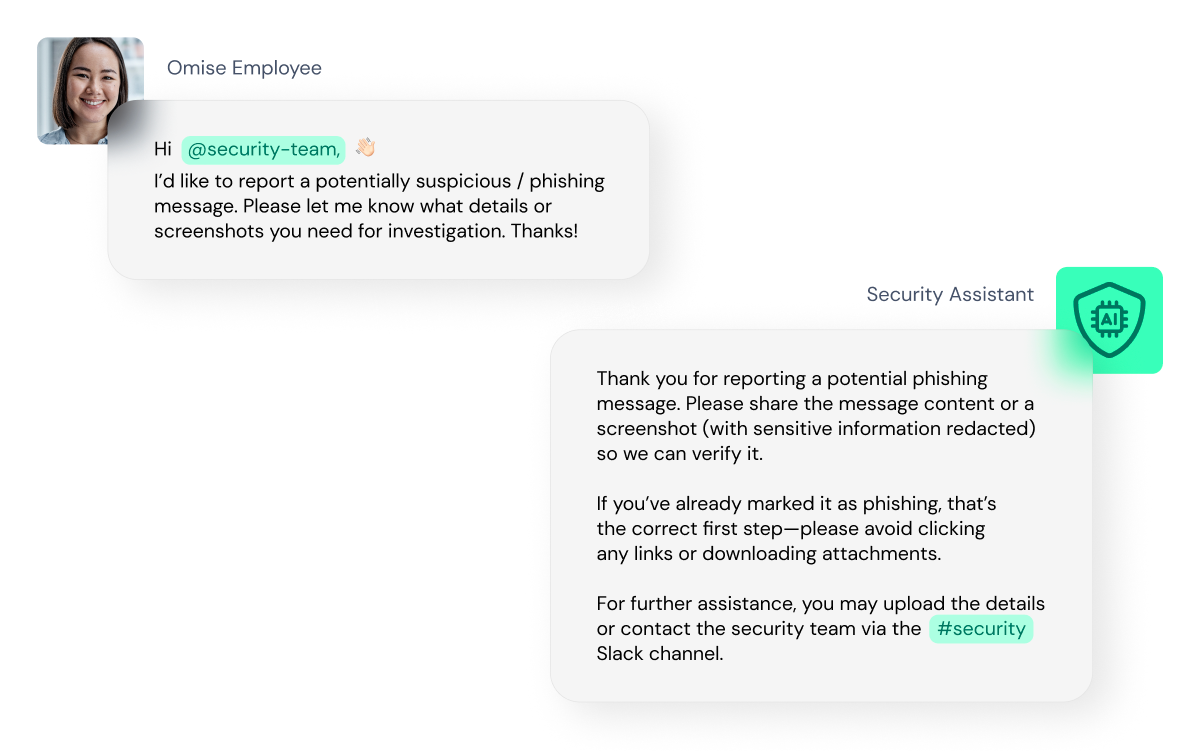

One of the most visible examples is an internal security chatbot. The bot answers security questions, provides guidance, and helps employees think through risks. “For example, when employees receive suspicious emails, the assistant helps them analyze what they’re seeing and decide what to do next,” Hardy says.

According to Hardy, the security team has built AI applications that bring security into the software development process from the very beginning.

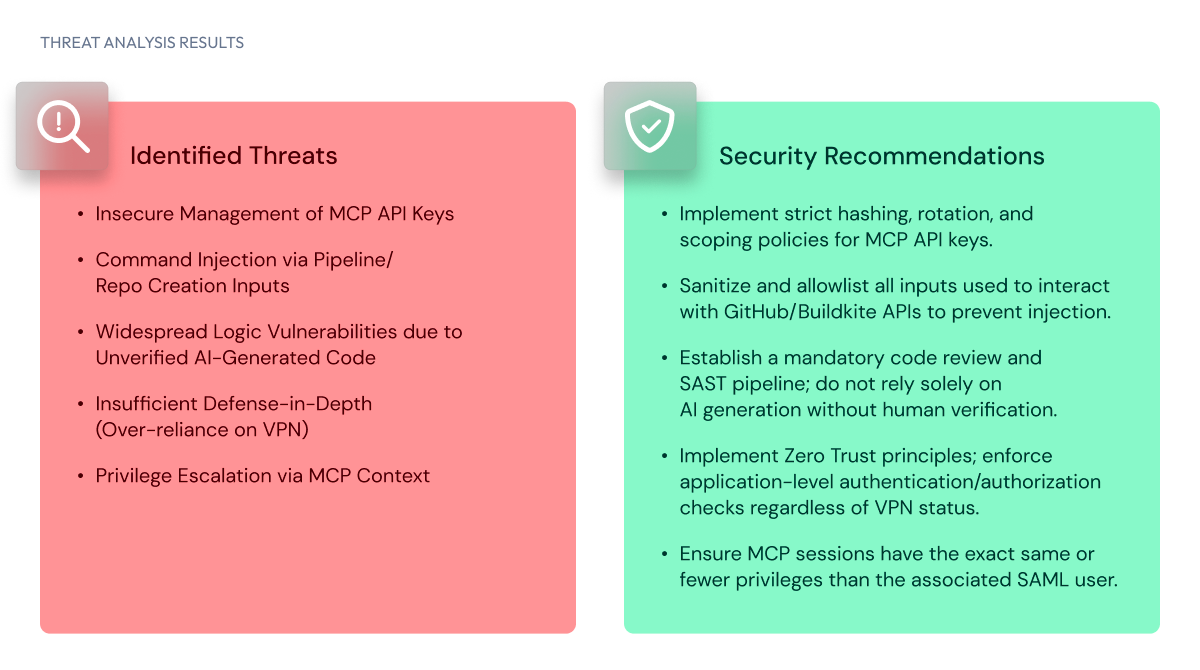

While design reviews and security standards are common in the industry, they are often applied selectively or later in the development process. At Omise, every piece of software goes through a mandatory internal pipeline that starts with a design document.

That document is analyzed by an internal application developed by the security team, which performs an initial threat model using a hybrid approach that combines generative AI with established standards such as OWASP ASVS. Engineers then receive a set of potential risks and relevant security requirements they can carry into ongoing reviews, helping teams address security concerns while designs are still flexible and easy to change.
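To make the hybrid idea concrete, here is a minimal sketch of how a model's suggestions could be paired with a fixed standards lookup. It is not Omise's internal application. The function names, the keyword lists, and the `suggest_risks` callable (a stand-in for whatever generative model is used) are all assumptions for illustration; only the chapter names are taken from OWASP ASVS 4.0.

```python
import re
from typing import Callable

# Chapter names follow OWASP ASVS 4.0. The keyword lists are illustrative
# assumptions, not part of the standard or of Omise's tooling.
ASVS_CHAPTERS = {
    "V2 Authentication": ("login", "password", "token"),
    "V4 Access Control": ("role", "permission", "admin"),
    "V5 Validation, Sanitization and Encoding": ("input", "form", "query"),
    "V12 Files and Resources": ("upload", "file"),
}


def matching_chapters(design_doc: str) -> list[str]:
    """Deterministic half: return ASVS chapters whose keywords appear as whole words."""
    words = set(re.findall(r"[a-z]+", design_doc.lower()))
    return [
        chapter
        for chapter, keywords in ASVS_CHAPTERS.items()
        if any(keyword in words for keyword in keywords)
    ]


def initial_threat_model(
    design_doc: str, suggest_risks: Callable[[str], list[str]]
) -> dict:
    """Combine model-suggested risks with the standards lookup.

    `suggest_risks` is a hypothetical hook for a generative model call.
    Its output is one input for reviewers, not a verdict.
    """
    return {
        "potential_risks": suggest_risks(design_doc),
        "asvs_chapters": matching_chapters(design_doc),
    }
```

Keeping the standards lookup separate from the model call means the requirements side stays repeatable even when the generated risks vary from run to run.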

Security involvement also starts at the product level. When product teams create user stories for major features, the security team uses AI to automatically generate abuse stories written from the perspective of potential attackers.

“There’s Simon the script-kiddie, who relies on tools anyone can download from the internet,” Hardy explains. “Then there are more serious personas like Fraudster Fred or Harry the Hacker.”

With those personas in mind, the system explores how a feature could be misused or exploited. Each set of abuse stories becomes a separate ticket, helping product teams see what could go wrong and where to follow up with security or engineering before problems surface in production.
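As a rough illustration of that flow, the sketch below turns one user story into one ticket with a section per persona. The persona names come from Hardy's description. Everything else is assumed for the example: the descriptions of Fred and Harry, the prompt wording, the `Ticket` shape, and the `generate` callable that stands in for the model.

```python
from dataclasses import dataclass
from typing import Callable

# Persona names are from the interview. Only Simon's description is quoted
# from it; the other two descriptions are illustrative assumptions.
PERSONAS = {
    "Simon the script-kiddie": "relies on tools anyone can download from the internet",
    "Fraudster Fred": "is motivated by financial gain",
    "Harry the Hacker": "is a skilled and persistent attacker",
}


@dataclass
class Ticket:
    title: str
    body: str


def build_prompt(user_story: str, persona: str, description: str) -> str:
    """Frame the user story from the attacker's point of view."""
    return (
        f"User story: {user_story}\n"
        f"Attacker persona: {persona}, who {description}.\n"
        "Write abuse stories describing how this persona could misuse the feature."
    )


def abuse_story_ticket(user_story: str, generate: Callable[[str], str]) -> Ticket:
    """Collect the abuse stories for one user story into a single ticket.

    `generate` is a hypothetical hook for a generative model call.
    """
    sections = []
    for persona, description in PERSONAS.items():
        stories = generate(build_prompt(user_story, persona, description))
        sections.append(f"## {persona}\n{stories}")
    return Ticket(title=f"Abuse stories: {user_story}", body="\n\n".join(sections))
```

Passing the model in as a plain callable keeps the sketch testable with a stub and leaves the choice of model and ticketing system open.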

Keeping Omise safe starts with strong foundations. AI systems used internally, or provided to customers, must meet strict privacy requirements and cannot train on company or customer data. Vendors are reviewed across legal, risk, and security before they are approved.

As AI becomes part of everyday work, the security team has expanded its focus to include how these tools are used in practice. They provide clear internal guidelines that outline appropriate use cases, required security controls, and common fallacies to avoid. To support this, the team publishes training materials that employees can work through on demand, without waiting for scheduled sessions or external resources.

“We wanted people to understand the risks as much as the benefits,” Hardy says. “AI is powerful, but it still requires human judgment.”

When asked about issues the team has encountered with AI, Hardy acknowledges critical risks such as prompt injection, but points to a less obvious challenge the team uncovered when the Model Context Protocol (MCP) was first introduced.

“MCP usage itself was safe, but it led to a growing number of requests for API keys to internal services. If left unaddressed, that would have spread static credentials across employee laptops.” Since then, MCP has added support for OAuth, allowing users to authenticate using existing methods.

“Secondary effects like this are common with new technologies,” Hardy says. “That’s why we watch for emerging risks, put better controls in place, and keep educating people on how to use AI safely.”

Using these tools extensively within the security team helps inform that work. “The more we work with them ourselves,” he adds, “the better we understand where the real risks are.”

As Omise moves further toward becoming an AI-first company, the security team expects new complexity to come with it. For them, that challenge is part of the appeal.

“Using these tools has been a success for us so far,” Hardy says. “And I’m sure we’ll find more interesting problems to solve as we go.”

Rather than replacing judgment, AI is becoming a way to extend it. For the Omise security team, the future lies in understanding where human experience and intelligent tools work best together, and shaping that balance as the company continues to evolve.